
https://github.com/raulmur/ORB_SLAM2

https://github.com/Martin20150405/SLAM_AR_Android.git

The lowercase 's' in ORB-SLAM2s stands for Smart · Swift · Small, emphasizing enhanced intelligence, faster performance, and lighter weight compared to the original ORB-SLAM2.

This project uses a lowercase 's'. A separate paper uses the uppercase name ORB-SLAM2S:

Y. Diao, R. Cen, F. Xue and X. Su, "ORB-SLAM2S: A Fast ORB-SLAM2 System with Sparse Optical Flow Tracking," 2021 13th International Conference on Advanced Computational Intelligence (ICACI), Wanzhou, China, 2021, pp. 160-165, doi: 10.1109/ICACI52617.2021.9435915.

That paper shares similar performance-optimization ideas with this project, although its methods have not yet been integrated here. It can nonetheless serve as a valuable reference for future optimization work. Sincere thanks to the authors for their contributions and for sharing their work.

ORB-SLAM2s is an enhanced spatial-computing tool for Android based on ORB-SLAM2. It supports real-time sparse point-cloud mapping, map saving/loading, and relocalization matching. By combining computer vision, inertial navigation (IMU), and Augmented Reality (AR) technologies, the project provides stable 6DoF (six-degrees-of-freedom) spatial positioning for mobile devices.
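A 6DoF pose combines a 3D rotation and a 3D translation. In upstream ORB-SLAM2, `System::TrackMonocular` returns the camera pose as a 4x4 world-to-camera matrix `Tcw` (empty when tracking fails). The following is only a sketch with hypothetical helper names, not this project's rendering code: it shows how an AR layer might recover the camera position in world coordinates and project a world-anchored point, assuming simple pinhole intrinsics `fx`, `fy`, `cx`, `cy`.

```python
from typing import List, Optional, Tuple

Matrix4 = List[List[float]]  # row-major 4x4 homogeneous transform [R | t; 0 0 0 1]
Vec3 = Tuple[float, float, float]


def camera_center(Tcw: Matrix4) -> Vec3:
    """Camera position in world coordinates: C = -R^T t, where Tcw = [R | t]."""
    R = [row[:3] for row in Tcw[:3]]
    t = [Tcw[i][3] for i in range(3)]
    # Component j of R^T t is column j of R dotted with t.
    return tuple(-sum(R[i][j] * t[i] for i in range(3)) for j in range(3))


def project(Tcw: Matrix4, Xw: Vec3, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a world point to pixel coordinates; None if it is behind the camera."""
    # Transform into the camera frame: Xc = R * Xw + t
    Xc = [sum(Tcw[i][j] * Xw[j] for j in range(3)) + Tcw[i][3] for i in range(3)]
    if Xc[2] <= 0.0:
        return None  # behind (or on) the camera plane, nothing to draw
    u = fx * Xc[0] / Xc[2] + cx
    v = fy * Xc[1] / Xc[2] + cy
    return (u, v)
```

For example, with an identity rotation and `t = (0, 0, 2)`, the camera sits at `(0, 0, -2)` in world coordinates, and the world point `(1, 0, 0)` lands at pixel `(570, 240)` for `fx = fy = 500`, `cx = 320`, `cy = 240`.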

- Lightweight Design: Fewer dependencies and streamlined code, making it easier to compile and deploy on mobile platforms.

- About IMU & VIO Support:

  - High Barrier of ORB-SLAM3: The VIO in the official implementation is tightly coupled and requires a high-quality IMU and strict Camera-IMU timestamp synchronization. This is common in robotics but difficult to achieve "out of the box" on Android phones through the standard APIs.

  - Android Reality: Most Android devices acquire Camera and IMU data asynchronously, without a unified hardware synchronization framework, and consumer-grade IMUs suffer from significant noise and drift. Without strict calibration and synchronization, VIO is more prone to divergence than pure visual SLAM. The SENSOR_TIMESTAMP method is only available in Android 11 and later versions.

- Low Resource Consumption: Lower CPU and RAM requirements compared to ORB-SLAM3.

- Practical Efficiency: Performance in Monocular-only scenarios is comparable to ORB-SLAM3 for standard mobile use cases.
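Because camera frames and IMU samples arrive asynchronously, any VIO extension first has to associate IMU data with each frame. A common approach is to linearly interpolate the IMU reading at the frame timestamp and reject frames whose bracketing IMU samples are too far apart. The sketch below assumes both streams already share one clock in nanoseconds; the function name and the 20 ms gap limit are hypothetical and not taken from this project.

```python
import bisect
from typing import Optional, Sequence, Tuple

Gyro = Tuple[float, float, float]   # angular velocity xyz in rad/s
ImuSample = Tuple[int, Gyro]        # (timestamp_ns, reading)


def interpolate_gyro(samples: Sequence[ImuSample], frame_ts_ns: int,
                     max_gap_ns: int = 20_000_000) -> Optional[Gyro]:
    """Linearly interpolate the gyro reading at a camera frame timestamp.

    Assumes `samples` is sorted by timestamp and uses the same clock as the
    camera. Returns None if the frame is not bracketed by two samples, or if
    the bracketing samples are more than `max_gap_ns` apart.
    """
    times = [ts for ts, _ in samples]
    i = bisect.bisect_left(times, frame_ts_ns)
    if i < len(times) and times[i] == frame_ts_ns:
        return samples[i][1]  # exact timestamp match, no interpolation needed
    if i == 0 or i == len(times):
        return None  # frame lies outside the buffered IMU window
    (t0, g0), (t1, g1) = samples[i - 1], samples[i]
    if t1 - t0 > max_gap_ns:
        return None  # gap too large to trust a linear interpolation
    alpha = (frame_ts_ns - t0) / (t1 - t0)
    return tuple(a + alpha * (b - a) for a, b in zip(g0, g1))
```

Returning `None` instead of extrapolating lets the caller fall back to pure visual tracking for that frame, which matches the observation above that poorly synchronized VIO tends to diverge.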

- Point Cloud SLAM Mapping: Real-time sparse mapping based on Monocular camera input.

- Map Persistence: Support for saving maps to local storage and reloading them for future sessions.

- Relocalization & Matching: Pose estimation and feature matching upon loading existing maps.

- Confidence Visualization: Visual tracking of keypoints and matching statistics between current frames and the loaded map.

- Plane Detection: Intelligent floor/surface detection based on current pose and point cloud data.

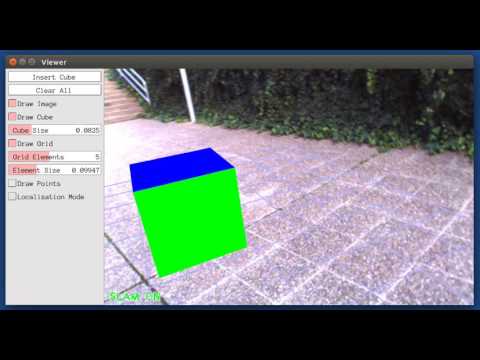

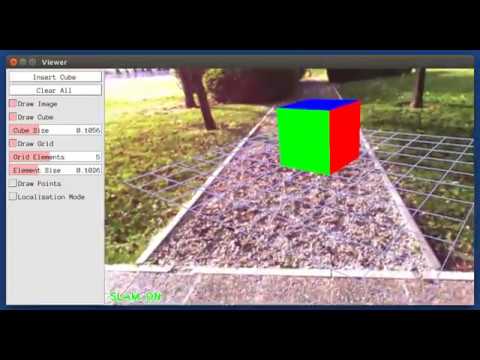

- Native AR Rendering: Basic AR implementation using OpenGL ES.

- Dark Frame Detection: Automatically skips dark or low-quality frames to prevent SLAM thread blocking.

- AR Object Management: Support for placing and interacting with 3D objects on detected planes.
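A standard way to find a dominant surface in a sparse point cloud is RANSAC plane fitting. The sketch below is a generic illustration with hypothetical names and arbitrarily chosen parameters (200 iterations, 2 cm inlier threshold), not necessarily the method this project uses; a practical detector would likely also use the current pose, for example to prefer planes whose normal aligns with gravity.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[Point, float]  # (unit normal n, offset d) such that n . p + d = 0


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Point, b: Point) -> Point:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ransac_plane(points: Sequence[Point], iterations: int = 200,
                 threshold: float = 0.02,
                 seed: int = 0) -> Optional[Tuple[Plane, List[int]]]:
    """Fit a plane with RANSAC; returns (plane, inlier indices) or None."""
    pts = list(points)
    if len(pts) < 3:
        return None
    rng = random.Random(seed)  # seeded so results are reproducible
    best: Optional[Tuple[Plane, List[int]]] = None
    for _ in range(iterations):
        p0, p1, p2 = rng.sample(pts, 3)
        n = _cross(_sub(p1, p0), _sub(p2, p0))
        norm = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
        if norm < 1e-9:
            continue  # degenerate (collinear) sample, no unique plane
        n = (n[0] / norm, n[1] / norm, n[2] / norm)
        d = -(n[0] * p0[0] + n[1] * p0[1] + n[2] * p0[2])
        # Keep points whose distance to the candidate plane is within threshold.
        inliers = [i for i, p in enumerate(pts)
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= threshold]
        if best is None or len(inliers) > len(best[1]):
            best = ((n, d), inliers)
    return best
```

The plane with the most inliers wins; refining it with a least-squares fit over those inliers is a common follow-up step.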

Currently tested primarily on devices with Qualcomm Snapdragon SoCs:

| SoC | Device | Performance |
|---|---|---|
| Snapdragon 8 Elite | Xiaomi 15 | 30 FPS |
| Snapdragon 8+ Gen1 | Redmi K60 | 30 FPS |
| Snapdragon 870 | Xiaomi 10S | 30 FPS |
| Snapdragon 835 | Xiaomi 6 | 15-20 FPS |
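Assuming each camera frame is fully processed before the next one arrives, these frame rates correspond to the following per-frame time budgets:

```math
t_{\text{frame}} = \frac{1000\ \text{ms}}{f}, \qquad
t_{30} \approx 33.3\ \text{ms}, \qquad
t_{20} = 50\ \text{ms}, \qquad
t_{15} \approx 66.7\ \text{ms}
```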

To ensure a smooth user experience, the system monitors exposure levels. When the environment is too dark, SLAM tracking is paused to avoid computational lag and "lost" states.
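One simple way to implement such a check is to compute the mean luminance of the grayscale frame and skip SLAM processing when it falls below a threshold. The sketch below assumes an 8-bit, tightly packed grayscale buffer (for example the Y plane of a `YUV_420_888` camera image whose row stride equals its width); the function name, the threshold of 40, and the sampling step are hypothetical rather than values taken from this project.

```python
from typing import Sequence


def is_dark_frame(luma: Sequence[int], width: int, height: int,
                  threshold: float = 40.0, step: int = 4) -> bool:
    """Return True if the frame's mean luminance is below `threshold`.

    `luma` is a row-major 8-bit grayscale buffer of at least width*height
    bytes. Only every `step`-th pixel in each direction is sampled, which
    keeps the check cheap enough to run on every frame.
    """
    if width <= 0 or height <= 0 or step <= 0:
        raise ValueError("width, height and step must be positive")
    if len(luma) < width * height:
        raise ValueError("buffer smaller than width * height")
    total = 0
    count = 0
    for y in range(0, height, step):
        row = y * width
        for x in range(0, width, step):
            total += luma[row + x]
            count += 1
    return total / count < threshold
```

In practice, adding hysteresis (for example requiring a few consecutive bright frames before resuming tracking) can keep the system from toggling on and off near the threshold.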

- Sparse Point Cloud SLAM Mapping

- Map Save/Load functionality

- Relocalization Matching

- Basic AR Rendering Engine

- Dark Frame Skip Logic

- 3D AR Object Management

- Simultaneous loading and matching of multiple map files

- Improve mapping speed and initialization.

- Enhance AR stability and 6DoF robustness.

- Optimize rendering pipeline for higher frame rates.

- Deepen sensor fusion (VIO: Visual-Inertial Odometry).

- Integration with Unity3D.

- SLAM frequency downsampling and adaptive noise handling.

- Refined sensor gating logic.

This project is built upon the following excellent open-source libraries:

Authors: Raul Mur-Artal, Juan D. Tardos, J. M. M. Montiel and Dorian Galvez-Lopez (DBoW2)

ORB-SLAM2 is a real-time SLAM library for Monocular, Stereo and RGB-D cameras that computes the camera trajectory and a sparse 3D reconstruction (in the stereo and RGB-D case with true scale). It is able to detect loops and relocalize the camera in real time. We provide examples to run the SLAM system in the KITTI dataset as stereo or monocular, in the TUM dataset as RGB-D or monocular, and in the EuRoC dataset as stereo or monocular.
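Relocalization ultimately relies on matching 256-bit binary ORB descriptors by Hamming distance. The sketch below shows brute-force nearest-neighbour matching with a distance limit and a nearest/second-nearest ratio test, with descriptors stored as Python integers; the names and default thresholds are illustrative assumptions. The actual ORB-SLAM2 matcher additionally restricts candidates using DBoW2 vocabulary nodes and applies a rotation-consistency histogram.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(query: Sequence[int], train: Sequence[int],
                      max_dist: int = 50,
                      ratio: float = 0.75) -> List[Tuple[int, int, int]]:
    """Brute-force matching with a ratio test.

    Returns (query_index, train_index, distance) tuples. A match is kept only
    if its distance is <= max_dist and clearly better than the second-best
    candidate (best < ratio * second_best).
    """
    matches = []
    for qi, q in enumerate(query):
        best_d, second_d, best_i = 257, 257, -1  # 257 exceeds any 256-bit distance
        for ti, t in enumerate(train):
            d = hamming(q, t)
            if d < best_d:
                best_d, second_d, best_i = d, best_d, ti
            elif d < second_d:
                second_d = d
        if best_i >= 0 and best_d <= max_dist and best_d < ratio * second_d:
            matches.append((qi, best_i, best_d))
    return matches
```

Counting the surviving matches (or their fraction of the query keypoints) gives a simple matching statistic of the kind a confidence visualization could display.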

[Monocular] Raúl Mur-Artal, J. M. M. Montiel and Juan D. Tardós. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Transactions on Robotics, vol. 31, no. 5, pp. 1147-1163, 2015. (2015 IEEE Transactions on Robotics Best Paper Award).

[Stereo and RGB-D] Raúl Mur-Artal and Juan D. Tardós. ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras. IEEE Transactions on Robotics, vol. 33, no. 5, pp. 1255-1262, 2017.

[DBoW2 Place Recognizer] Dorian Gálvez-López and Juan D. Tardós. Bags of Binary Words for Fast Place Recognition in Image Sequences. IEEE Transactions on Robotics, vol. 28, no. 5, pp. 1188-1197, 2012.

The ORB-SLAM2 core library is released under a GPLv3 license. For a list of all code/library dependencies (and associated licenses), please see Dependencies.md.

For a closed-source version of ORB-SLAM2 for commercial purposes, please contact the authors: orbslam (at) unizar (dot) es.

This Android adaptation and enhancement project (ORB-SLAM2s) is also licensed under the GPL-3.0 License. See the LICENSE.txt and License-gpl.txt files for details.

For project collaboration or other field cooperation inquiries, please contact: OlscStudio@outlook.com

If you use ORB-SLAM2 (Monocular) in an academic work, please cite:

    @article{murTRO2015,
      title={{ORB-SLAM}: a Versatile and Accurate Monocular {SLAM} System},
      author={Mur-Artal, Ra\'ul and Montiel, J. M. M. and Tard\'os, Juan D.},
      journal={IEEE Transactions on Robotics},
      volume={31},
      number={5},
      pages={1147--1163},
      doi = {10.1109/TRO.2015.2463671},
      year={2015}
    }

If you use ORB-SLAM2 (Stereo or RGB-D) in an academic work, please cite:

    @article{murORB2,
      title={{ORB-SLAM2}: an Open-Source {SLAM} System for Monocular, Stereo and {RGB-D} Cameras},
      author={Mur-Artal, Ra\'ul and Tard\'os, Juan D.},
      journal={IEEE Transactions on Robotics},
      volume={33},
      number={5},
      pages={1255--1262},
      doi = {10.1109/TRO.2017.2705103},
      year={2017}
    }

If you use this Android adaptation (ORB-SLAM2s) in an academic work, please acknowledge this work appropriately.

We use OpenCV to manipulate images and features. Download and install instructions can be found at: http://opencv.org. Version 4.5.0 or later is required.

Eigen3 is required by g2o (see below). Download and install instructions can be found at: http://eigen.tuxfamily.org.

We use modified versions of the DBoW2 library to perform place recognition and g2o library to perform non-linear optimizations. Both modified libraries (which are BSD) are included in the Thirdparty folder.
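DBoW2 compares images through weighted bag-of-words vectors. One of the scoring functions it supports, the L1 score from the DBoW2 paper cited above, is $s(v_1, v_2) = 1 - \frac{1}{2}\left\lVert \frac{v_1}{\lVert v_1 \rVert_1} - \frac{v_2}{\lVert v_2 \rVert_1} \right\rVert_1$, which ranges from 0 (no shared words) to 1 (identical word distributions). The sketch below computes it over sparse vectors stored as dictionaries; the type alias and function name are hypothetical and are not part of the DBoW2 API.

```python
from typing import Dict

BowVector = Dict[int, float]  # word id -> weight (e.g. tf-idf)


def l1_score(v1: BowVector, v2: BowVector) -> float:
    """L1 similarity in [0, 1]: 1 - 0.5 * || v1/|v1| - v2/|v2| ||_1."""
    n1 = sum(abs(w) for w in v1.values())
    n2 = sum(abs(w) for w in v2.values())
    if n1 == 0 or n2 == 0:
        return 0.0  # an empty vector shares no words with anything
    words = set(v1) | set(v2)
    # Sum of absolute differences between the L1-normalized vectors.
    diff = sum(abs(v1.get(k, 0.0) / n1 - v2.get(k, 0.0) / n2) for k in words)
    return 1.0 - 0.5 * diff
```

Normalizing before comparing makes the score independent of how many features each image produced, so a keyframe with many keypoints is not automatically favoured as a loop or relocalization candidate.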

The multilingual translations of this project are provided by Qwen3. Please excuse any errors and submit an issue if you find one.

Reference: https://github.com/Olsc/Android_3dof

Reference: https://github.com/ZUXTUO/Android_6dof

This project has not yet completed research and integration.