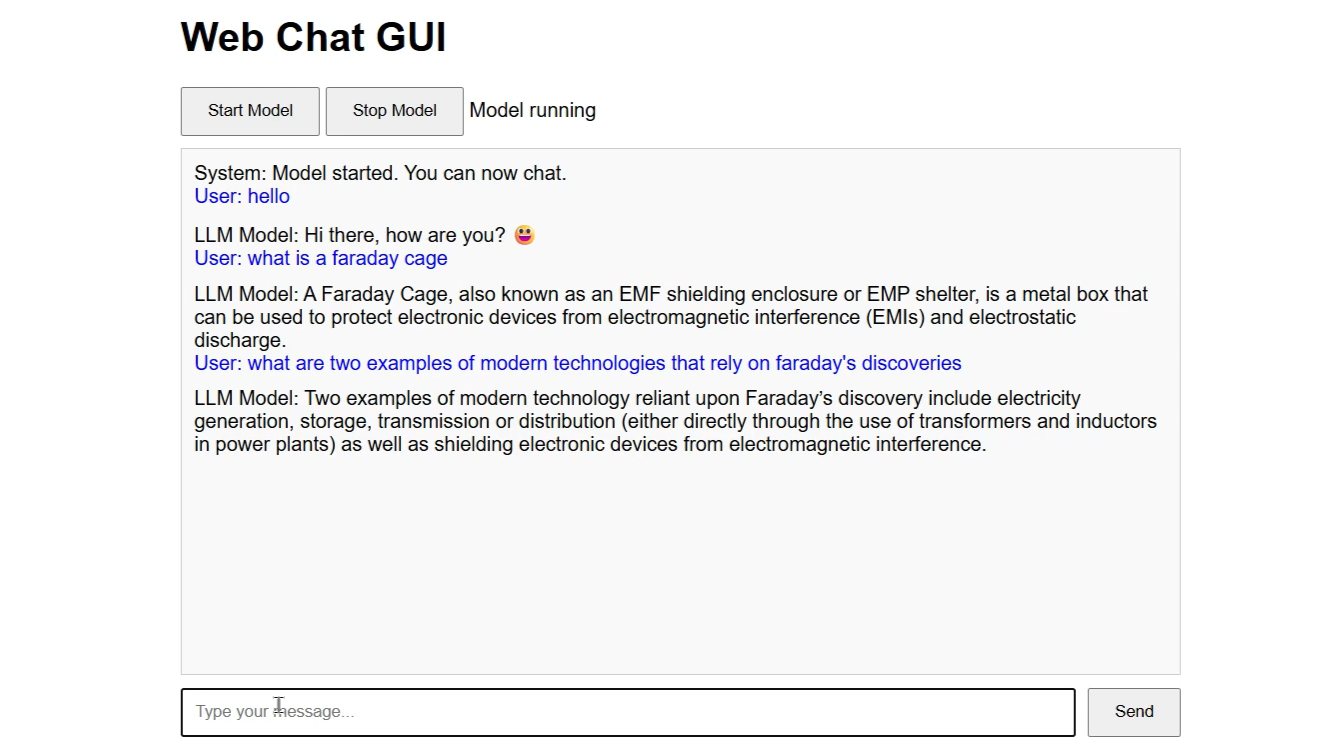

This repository supplements my tutorial series on deploying a production-ready Flask application that runs inference locally with a 4B-parameter LLM.

- Part 1: Server Setup - Ubuntu server configuration and environment preparation

- Part 2: Flask Deployment - Flask app, Nginx, Gunicorn, and GPT4All integration
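In Part 2, Gunicorn serves the Flask app and Nginx sits in front of it. The fragment below is a minimal `gunicorn.conf.py` sketch. The port, worker count, and timeout are illustrative assumptions, not the tutorial's exact values. It runs a single worker because each Gunicorn worker is a separate process that would load its own copy of the model into memory. It also raises the timeout, because generating a response can take longer than Gunicorn's 30-second default.

```python
# gunicorn.conf.py: illustrative values only, adjust for your server

# Listen on localhost only; Nginx proxies public traffic to this address.
bind = "127.0.0.1:8000"

# Each worker process would load its own copy of the model, so start with one.
workers = 1

# Allow slow generations to finish instead of the worker being killed at 30 s.
timeout = 120
```

Gunicorn reads this file automatically when it sits in the working directory, or you can pass it explicitly with `gunicorn -c gunicorn.conf.py app:app`. Here `app:app` assumes a module `app.py` that exposes a Flask object named `app`.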

Possible use cases:

- Private chatbot for sensitive business data
- Educational AI projects
- Prototyping AI applications
- Learning production deployment patterns
- Cost-effective AI solutions

Note: While this tutorial uses GPT4All as an example, the same deployment approach (Flask behind Gunicorn and Nginx) should carry over to other local language models. Feel free to adapt it for your specific needs!
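One way to keep the model swappable is to let the request-handling code depend on a small interface instead of on GPT4All directly. The sketch below is a hypothetical example of that pattern. `LocalModel`, `EchoModel`, and `answer` are illustrative names and do not come from the tutorial code. `EchoModel` is a stand-in for testing. A real backend would adapt whichever runtime you use, such as the GPT4All Python bindings, to the same `generate` method.

```python
from typing import Protocol


class LocalModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def generate(self, prompt: str, max_tokens: int = 200) -> str: ...


class EchoModel:
    """Stand-in backend for tests; a real one would wrap an actual model runtime."""

    def generate(self, prompt: str, max_tokens: int = 200) -> str:
        # Crude placeholder: truncate by whitespace-separated words, not real tokens.
        words = prompt.split()[:max_tokens]
        return "echo: " + " ".join(words)


def answer(model: LocalModel, prompt: str) -> str:
    """Handler logic that stays independent of the concrete model backend."""
    prompt = prompt.strip()
    if not prompt:
        raise ValueError("prompt must not be empty")
    return model.generate(prompt)


if __name__ == "__main__":
    print(answer(EchoModel(), "hello world"))  # prints "echo: hello world"
```

A Flask route would then call `answer(model, user_prompt)`, where `model` is created once at startup. Switching to a different local model only means writing another class with a matching `generate` method.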